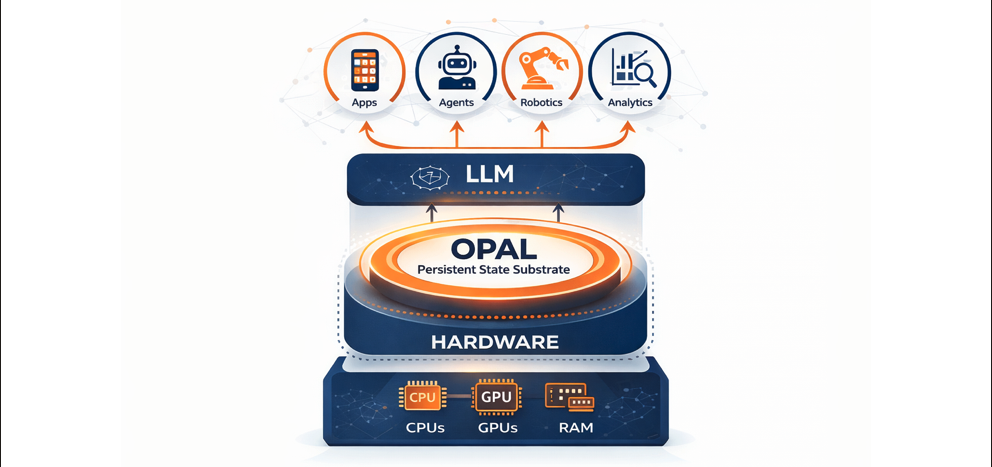

Opal One v2.0 — Persistent state infrastructure above the compute layer.

“Opal One plays the role that file systems and process managers played for operating systems: it makes persistent state visible, navigable, and cheap to operate without modifying the underlying compute.”

The AI Entropy Tax is over. Welcome to the Compute Ice Age.

For the last two months, engineers have been reviewing Project: OPAL | ONE to evaluate a single question: Can AI memory scale without proportional energy cost?

Today, I’m releasing a 24‑page technical paper documenting the empirical validation of a deterministic memory substrate that decouples semantic state from probabilistic re‑inference.

The Problem. Modern AI systems pay an “entropy tax.” Energy consumption scales with total memory size (M), not the semantic change applied (Δs). As horizons grow, hardware heats up and cloud costs escalate.

The Approach. Probabilistic re‑inference is replaced with a deterministic state evolution operator, g(t). Time becomes an active control signal. State advances via traversal and composition, not recomputation.

Observed results on consumer silicon

• 0.0% CPU delta above the ~17.2% baseline system tax (validated at 15M–25M nodes)

• Ambient thermal behavior with no thermal ramping under sustained operation

• Density validation: ~687 bytes per node, establishing a path to billion‑node local memory within a fixed 1 TiB envelope

Why this matters. For robotics and edge systems, memory becomes a persistent semantic substrate—not a heat source. Long‑horizon learning without thermal throttling or safety‑critical latency spikes becomes physically viable.

Disclosure & IP. The paper establishes invariant‑level mathematics and empirical existence. Core architecture, including the g(t) operator mechanics and compression heuristics, is protected under a filed Utility Patent and multiple provisionals. Constructive details are available for restricted technical diligence under NDA.

(lossless recall)

(scale-flat)

(17.2% steady-state • ΔT undetectable)

Kindred Test Harness

Evaluation Notice. Kindred is not a product and is not intended for public release. It exists solely as a controlled evaluation environment for benchmarking, instrumentation, and reproducibility.

Cross‑Model Memory Propagation (OpenAI + Gemini)

We built an internal test harness (Kindred) that couples the OPAL | ONE engine to multiple LLM APIs and measures how identical prompts write into the same deterministic, addressable memory substrate over time. The project name reflects the coupling between the substrate and upstream models during evaluation.

Observed results

Protocol (controls + logging)

Same substrate config, same prompt set, same write pathway. Only the upstream model varies. Each run is recorded with a run ID and deterministic node IDs, plus timestamped write/edge traces and snapshot hashes for replay.

Metrics instrumented

• Node delta per prompt (creation rate) and edge churn

• Retrieval stability (top‑k overlap / rank stability across repeats)

• Continuity‑repair rate (explicit “rehash” turns per session)

Interpretation boundary

This isolates propagation behavior under identical substrate constraints. It does not claim model superiority or attribute causality beyond what the logged signals directly measure.

With identical prompts, each model produced distinct clustering patterns in retained nodes while writing through the same substrate and pathway.

Clustering emerged without manual tags or predefined semantic schemas; write patterns diverged by model.

As the graph accumulated and retrieval stabilized, repeated continuity‑repair (“rehash”) turns tended to decline in‑session—a measurable signal the harness can quantify without claims of causality.

Instrumentation is being tightened (write gates, retrieval scoring, topology stability metrics). As runs are validated and releasable, we will publish periodic, benchmarked updates with the exact protocol + measured outputs.

Founder Note

Note. Click the image to view at full resolution without leaving the page.

I set out to build a deterministic memory substrate after realizing the behavior I needed could not be layered onto existing systems. The constraints were not algorithmic; they were architectural. If memory was going to be deterministic, persistent, and stable under pressure, the substrate itself had to be designed from first principles.

This was not about making models smarter. It was about making memory real.

The objective was explicit: state that persists without inference, traverses deterministically, and remains coherent as new information propagates. No probabilistic replay. No reconstruction. No orphaned nodes.

As the system evolved, compression increased naturally as information propagated. In live testing, the active graph reached tens of millions of nodes with zero dropped state, fully deterministic traversal, and stable latency (~0.32 ms). Measured memory density converged to ~687 bytes per node, implying a theoretical capacity of ~1.6 billion nodes per TiB under the same constraints.

These properties are present today under sustained operation, not projected, simulated, or inferred.

The most unexpected result was not speed or density, but thermals. Under sustained traversal, CPU utilization flattened and the system ran cold. External calls decreased as state became locally available. Once information existed, it no longer needed to be recomputed.

The turning point was not performance. It was the moment memory stopped behaving like something the system had to reconstruct. Once state boundaries were constrained correctly, inference could be reduced—or fully disabled—without coherence collapsing. Memory stopped being simulated and became infrastructure.

Most systems rely on intelligence to compensate for fragile memory. This takes the opposite approach: make memory solid, and intelligence no longer has to fight the substrate. The result is not louder behavior or larger demos. It is quieter systems. Fewer calls. Low heat. Zero drift. Continuity by construction.

Opal One is intentionally positioned outside the hot path. It does not sit inside inference loops, schedulers, kernels, or control code. It activates only where systems would otherwise rebuild context, re‑embed state, or re‑infer what already exists. When Opal is present, redundant work is removed. When it is absent, systems fall back to normal cold operation.

2026 inaugurates the compute ice age. Persistent state runs cold. Systems hold.

Robotic & Autonomous Systems — No Resets

“Robotic systems don’t fail because they lack intelligence. They fail when continuity is lost. Persistent, local state turns environments into remembered space instead of repeatedly re‑interpreted signals.”

Robotic and autonomous systems fail when continuity depends on cloud round‑trips. Sensor data is uploaded, inference and learning occur remotely, and updated state is sent back into the control loop. When bandwidth throttles, latency spikes, or connectivity drops, systems operate on stale state—causing hesitation, instability, or full mission resets.

Common failure modes in deployed autonomy stacks

1. Cloud‑coupled memory: learned priors, maps, goals, and constraints live off‑device and must be re‑fetched or reconstructed after interruption.

2. Latency‑bound recovery: state rehydration depends on round‑trip inference, introducing delay and power spikes during recovery windows.

3. Unstable replay: when memory is implicit or transient, identical scenarios do not reliably reconstruct the same internal state.

OPAL | ONE removes continuity from the network path

Opal persists semantic and operational state locally in a deterministic, addressable graph. After restart or degradation, recovery is a bounded read and traversal operation—not a cloud‑dependent re‑inference loop. Control continuity remains on‑device.

This shifts the role of the cloud. Training, aggregation, and analytics move off the critical control path and become asynchronous. Bandwidth throttling affects update velocity, not coherence. As local graphs scale into the tens of millions of nodes, learning compounds in place and behavior stabilizes without waiting on round trips.

A paradigm shift in cloud AI

Cloud AI has been optimized for faster inference.

The real bottleneck is repeated reconstruction.

Most AI systems rebuild semantic context continuously—prompt stuffing, embedding regeneration, and re‑inference—at every interaction or control cycle. Compute is spent reconstructing state the system already had, not advancing capability. At scale, this loop drives escalating GPU utilization, thermal load, and brittle behavior under throttling, outages, or network variance.

Opal | One removes the need to rebuild state.

Semantic memory is encoded once, persisted deterministically, and traversed as a stable substrate. Meaning exists as addressable state—available locally—rather than repeatedly approximated through probabilistic replay.

The result is not faster inference, but less inference. Steady‑state operation collapses into predictable traversal: prompts shrink, external calls drop, thermals flatten, and continuity holds under sustained load.

Reference results

| Graph Size (Nodes) | Compression | Recall | Traversal |

|---|---|---|---|

| ~106 | 96.8% | Lossless | ~0.29 ms |

| ~107 | 97.1% | Lossless | ~0.31 ms |

| ~108 | 97.2% | Lossless | ~0.32 ms |

New, reproducible benchmark runs from the Kindred test harness (cross‑model propagation + stability metrics). As instrumentation tightens, we will publish protocol details and measured outputs as results are validated and releasable.

We are currently building a live demonstration environment to validate architecture scope and measured claims. This is a live substrate testing harness activated by requested testing tokens. This update will be live shortly. If you are interested in receiving a token for a free evaluation harness test, please use the Reach Out button below.

Updated licensing and evaluation path for qualified partners, plus the funding roadmap that supports expanded benchmarking, documentation, and integration support.

The Space Race: Cooling the AI Cloud

AI cloud providers are in a modern space race: more inference, more throughput, more density — and relentless pressure to keep silicon within safe thermal limits. The constraint is not just compute; it’s power delivery, heat removal, and the cost of sustained cooling at scale. Opal One targets the waste inside the loop by preserving deterministic semantic state so systems do not repeatedly rebuild the same context. When redundant work is removed, less energy turns into heat — which reduces thermal load, lowers the cooling burden, and eases hardware pressure under steady-state operation. Modeled visualization: the chart below uses a public baseline for U.S. data-center electricity use and applies a user-controlled annual growth rate to show how demand can scale over time. Real-world outcomes vary by workload, hardware, and deployment.

U.S. primary energy consumption (2023): 93.59 quads (≈ 9.87×1019 J/year). Data centers (DOE/LBNL 2023): 176 TWh/year (≈ 6.34×1017 J/year). Source: DOE/LBNL; EIA for primary energy. Conversion: 1 TWh = 3.6×1015 J.

In conventional AI systems, a large share of energy consumption is driven by repeated model inference, embedding regeneration, memory bandwidth pressure, and the thermal overhead required to sustain those cycles. Industry analyses consistently show inference and data movement as dominant contributors to AI power draw.

Opal One’s benchmarks demonstrate deterministic compression and preserved semantic state, allowing systems to avoid repeated re‑inference during steady‑state operation. When inference‑driven compute is reduced, overall system energy demand can decrease proportionally.

The slider explores modeled scenarios only. For example, if inference accounts for a large fraction of total energy use, eliminating that work could yield up to ~25% system‑level energy reduction, depending on workload mix and deployment scale. These are not empirical guarantees.

Context sources: U.S. DOE data center energy reports; industry analyses from Google, NVIDIA, and McKinsey identifying inference, memory bandwidth, and thermal management as primary AI energy drivers. Measured metrics (Joules/query, $/hour, thermal envelope) will replace this model once instrumentation is complete.